@weave.op

def call_model_invoke(

model_id: str,

prompt: str,

max_tokens: int = 100,

temperature: float = 0.7

) -> dict:

body = json.dumps({

"anthropic_version": "bedrock-2023-05-31",

"max_tokens": max_tokens,

"temperature": temperature,

"messages": [

{"role": "user", "content": prompt}

]

})

response = client.invoke_model(

modelId=model_id,

body=body,

contentType='application/json',

accept='application/json'

)

return json.loads(response.get('body').read())

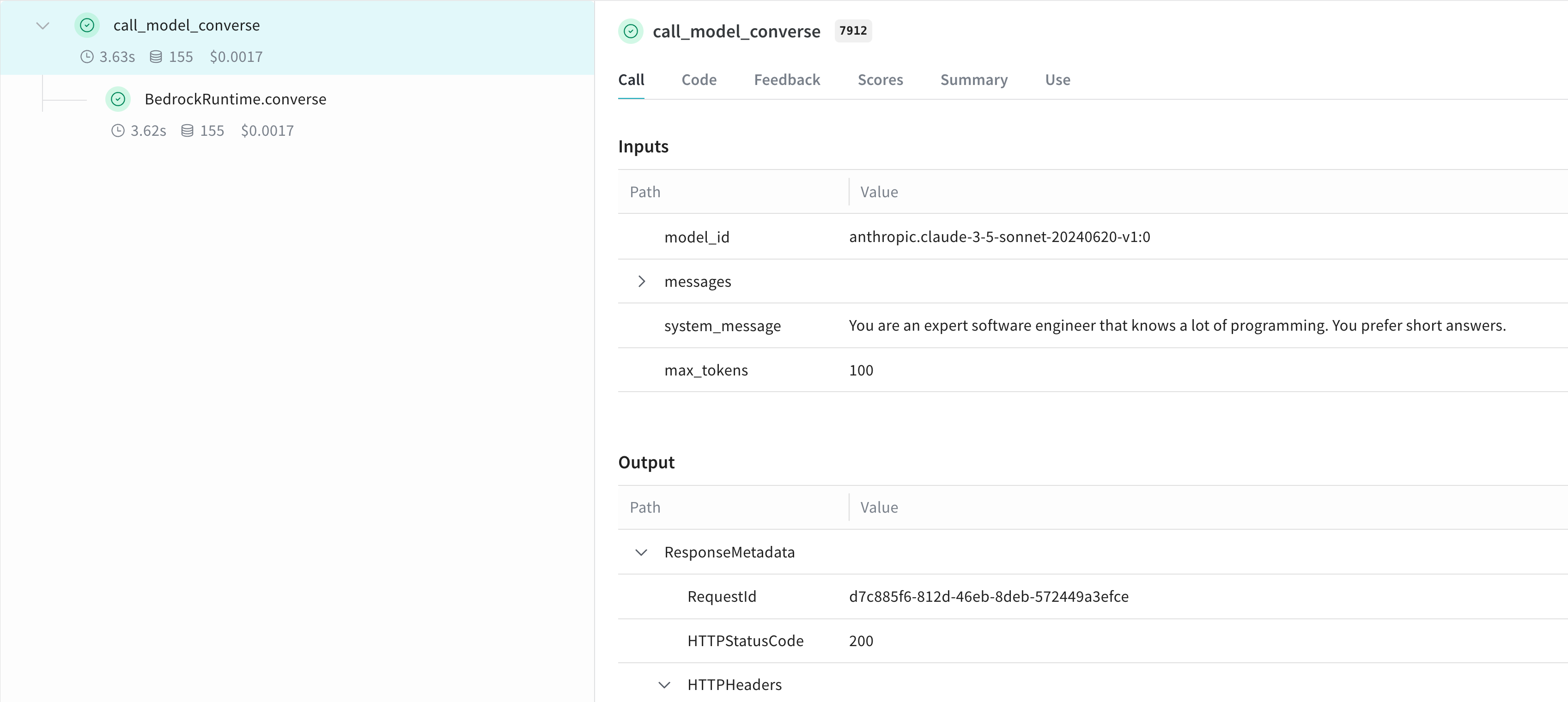

@weave.op

def call_model_converse(

model_id: str,

messages: str,

system_message: str,

max_tokens: int = 100,

) -> dict:

response = client.converse(

modelId=model_id,

system=[{"text": system_message}],

messages=messages,

inferenceConfig={"maxTokens": max_tokens},

)

return response